With the growing prevalence of Dell PowerEdge servers, the Dell PowerEdge R710 has gradually caught Ethernet users’ eyes for its competitive price, solid quality and low power consumption. As part of the Dell PowerEdge Select Network, Intel Ethernet Network Adapters are high-performance adapters for 1/10/25/40GbE network connections. This article gives a brief introduction to the Dell R710 server, the Intel NDC for the Dell R710, and optics for the Intel NDC.

About Dell R710 Server

The Dell PowerEdge R710 is a 2U rack server that supports up to two quad- or six-core Intel Xeon 5500 and 5600 series processors and up to eight SATA or SAS hard drives, giving you up to 18TB of internal storage. Its 18 memory slots allow for a maximum of 288GB of memory, so the R710 can handle any memory-intensive task you throw at it. It combines low power consumption with high performance, which helps you save both money and time. The paragraphs below describe the R710 in more detail.

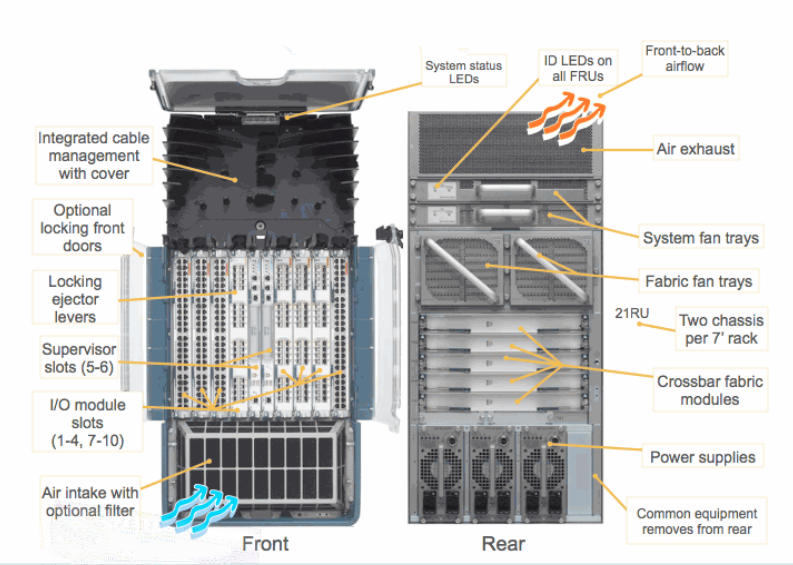

Figure 1: Dell R710 2U Rack Server (Source: www.DELL.com)

The R710 is built around Intel’s Xeon 5500 and 5600 series processors, which max out at 12MB of cache and 6 cores. By comparison, Intel’s E5-2600 processors, which Dell adopted in later PowerEdge generations, have up to 20MB of cache and up to 8 cores. Those 8-core processors are aimed at increased security, I/O innovation, network capabilities, and overall performance.

The R710 supports up to 18TB of internal storage. It accepts up to six 3.5″ hard drives or eight 2.5″ hard drives, and it supports 6Gb/s SAS, SATA, and SSD drives.
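The capacity figures quoted above imply a per-drive and per-DIMM size that the article does not state directly. The short sketch below derives them by simple division; the helper name `per_unit_capacity` is purely illustrative, and the results are arithmetic consequences of the quoted maximums rather than figures taken from a Dell datasheet.

```python
def per_unit_capacity(total: float, units: int) -> float:
    """Return the capacity each unit must provide to reach the given total."""
    if units <= 0:
        raise ValueError("units must be a positive integer")
    return total / units


# Maximums quoted in this article.
MAX_STORAGE_TB = 18   # maximum internal storage
LFF_BAYS = 6          # 3.5-inch drive bays
MAX_MEMORY_GB = 288   # maximum memory
DIMM_SLOTS = 18       # memory slots

# Derived values (assumption: every bay and slot holds an identical part).
drive_tb = per_unit_capacity(MAX_STORAGE_TB, LFF_BAYS)
dimm_gb = per_unit_capacity(MAX_MEMORY_GB, DIMM_SLOTS)

print(f"Implied drive size: {drive_tb:g} TB per 3.5-inch bay")
print(f"Implied DIMM size:  {dimm_gb:g} GB per memory slot")
```

In other words, reaching the quoted maximums means filling all six 3.5″ bays with 3TB drives and all 18 slots with 16GB modules.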

The R710 sports Dell’s new iDRAC6 management controller, which has a dedicated network port at the rear of the server. It provides a web browser interface for remotely monitoring the status of critical server components, and the Enterprise upgrade key adds virtual boot media and KVM-over-IP remote access.

Based on Symantec’s Altiris Notification Server, the Dell Management Console takes over from Dell’s elderly IT Assistant and provides the tools to manage all your IT equipment, not just Dell servers. Installation is a lengthy process, but it kicks off with an automated search that populates its database with discovered systems and SNMP-enabled devices.

Intel Network Adapters for Dell PowerEdge R710 Servers

According to the Dell PowerEdge R710 datasheet, the R710 supports the dual-port 10GbE Intel Ethernet Server Adapter X520-DA2. The Dell Intel Ethernet Network Daughter Card X520/I350 provides low-cost, low-power flexibility and scalability for the entire data center. The Intel Ethernet Network Daughter Card X520-DA2/I350-T2 provides two 1000BASE-T ports and two 10G SFP+ ports, as shown in Figure 2, to support 1GbE/10GbE network connectivity.

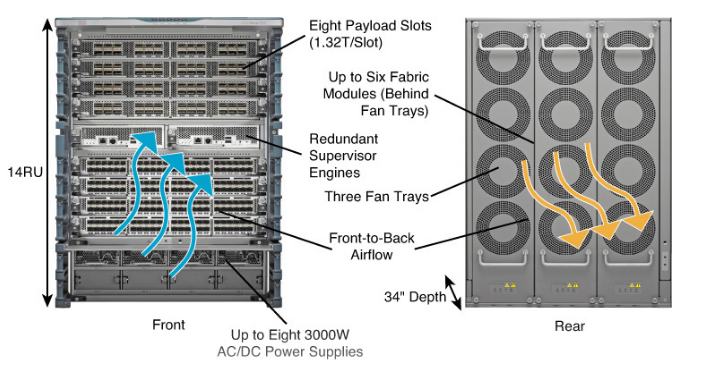

Figure 2: Intel Ethernet Network Daughter Card X520-DA2

Cables and Optical Modules for Intel NDC X520-DA2

As mentioned above, the Intel Ethernet Network Daughter Card X520-DA2 provides two 1000BASE-T ports and two 10G SFP+ ports. The two 1000BASE-T ports are RJ45 copper ports, so they take twisted-pair Ethernet cables (Cat5e or better) directly rather than SFP transceivers, and they provide the 1G network connections. For 10G data transfer, we can plug Intel-compatible SFP+ transceivers into the two 10G ports on the card. Alternatively, we can use a Direct Attach Cable (DAC), such as an Intel-compatible 10G SFP+ DAC cable, to achieve 10G network connectivity. DAC cables are suitable for data transmission over very short links, while optical modules are more appropriate for longer transmission distances.
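The choice between a DAC cable and an optical module comes down mainly to link length, which can be sketched as a small lookup. The function name `pick_10g_media` and the reach thresholds are assumptions for illustration: they reflect commonly quoted limits (roughly 7 m for passive SFP+ DAC, 300 m for 10GBASE-SR over OM3 multimode fiber, and 10 km for 10GBASE-LR over single-mode fiber), not values taken from this article or from an Intel compatibility list.

```python
# Assumed typical reach limits in meters; check the actual cable or module spec.
PASSIVE_DAC_MAX_M = 7      # passive SFP+ twinax DAC
SR_MAX_M = 300             # 10GBASE-SR over OM3 multimode fiber
LR_MAX_M = 10_000          # 10GBASE-LR over single-mode fiber


def pick_10g_media(link_length_m: float) -> str:
    """Suggest a 10G SFP+ connection type for a given link length in meters."""
    if link_length_m <= 0:
        raise ValueError("link length must be positive")
    if link_length_m <= PASSIVE_DAC_MAX_M:
        return "SFP+ DAC cable"
    if link_length_m <= SR_MAX_M:
        return "10GBASE-SR SFP+ module (multimode fiber)"
    if link_length_m <= LR_MAX_M:
        return "10GBASE-LR SFP+ module (single-mode fiber)"
    return "beyond the reach of the options modeled here"


# Example: a top-of-rack link, a cross-room link, and a cross-campus link.
for length in (3, 120, 2_000):
    print(f"{length} m -> {pick_10g_media(length)}")
```

A real deployment would also weigh cost, power draw, and which modules the adapter firmware accepts, which this sketch deliberately leaves out.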

Figure 3: Intel NDC X520-DA2 with 10G optical modules

Conclusion

The Dell R710 server, paired with the high-performance Intel Ethernet Network Daughter Card X520-DA2, gives you a 2U rack server that efficiently addresses a wide range of key business applications. With the Intel NDC, it also provides a solid solution for 1G and 10G Ethernet network connectivity. The result is a low-power, high-capacity server to keep your business running.
