In today’s data-driven environment, network operators face growing demand to carry ever-rising data traffic while keeping capital and operating expenditures in check. Incremental advances in high-bandwidth components, coherent detection, and optical networking have given rise to coherent interfaces that allow for efficient control and lower cost, power, and footprint.

Below, we discuss 400G, coherent optics, and how the two are transforming data communication and network infrastructure in ways that benefit both clients and network service providers.

What is 400G?

400G is the latest generation of cloud infrastructure, representing a fourfold increase in maximum data-transfer speed over the current 100G standard. Besides being faster, 400G uses more lanes, which allows for greater throughput (the quantity of data handled at a time). Data centers are therefore shifting to 400G infrastructure to deliver new user experiences through innovative services such as augmented reality (AR), virtual reality (VR), and virtual gaming.

Simply put, a data center is like an expressway interchange that receives information and directs it to various destinations, and 400G is an upgrade to that interchange that adds more lanes and a higher speed limit. This makes 400G not only the go-to cloud infrastructure but also the next big thing in optical networks.

What is Coherent Optics?

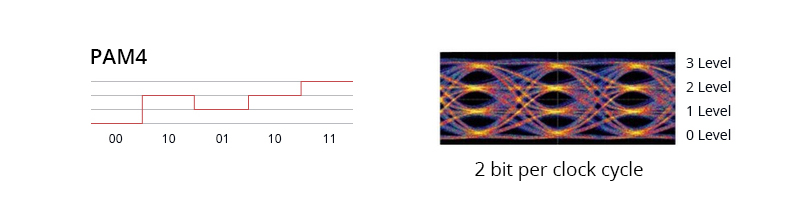

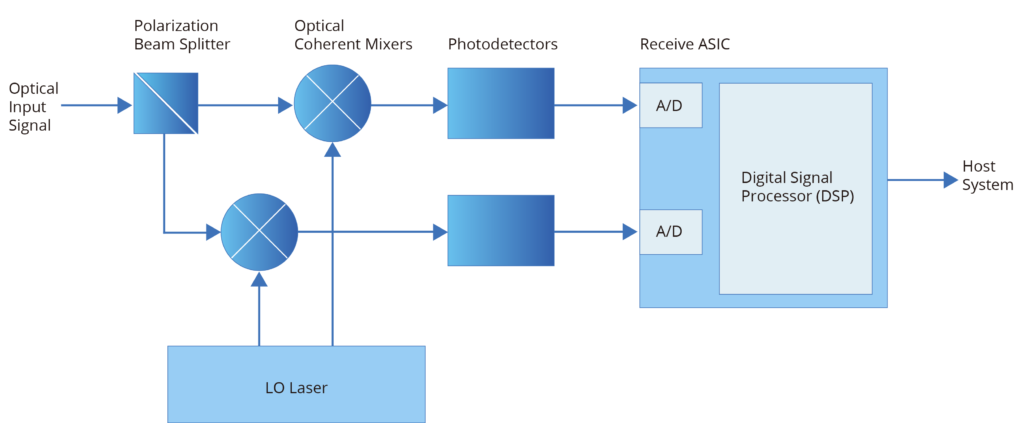

Coherent optical transmission, or coherent optics, is a technique that modulates both the amplitude and the phase of light, and transmits across two polarizations, to carry significantly more information through a fiber-optic cable. Coherent optics also provides higher bit rates, greater flexibility, simpler photonic line systems, and better optical performance.

This technology underpins the industry’s drive toward transfer speeds of 100G and beyond while delivering terabits of data across one fiber pair. When appropriately implemented, coherent optics solves the capacity issues that network providers are experiencing. It also allows each carrier to scale from 100G to 400G and beyond, which delivers more data throughput at a lower cost per bit.
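As a rough illustration of how modulating amplitude and phase across two polarizations multiplies capacity, the sketch below estimates the raw line rate of a single coherent carrier. The function name and the 60 Gbaud symbol rate are assumptions chosen for illustration, not figures from this article. The result is a raw rate that still includes FEC and framing overhead, so the usable payload is lower.

```python
import math

def raw_line_rate_gbps(constellation_points: int, baud_gbd: float,
                       polarizations: int = 2) -> float:
    """Raw (pre-FEC) line rate in Gb/s for one coherent carrier."""
    # Each symbol carries log2(M) bits, where M is the number of
    # distinct amplitude/phase states in the constellation.
    bits_per_symbol = math.log2(constellation_points)
    return bits_per_symbol * polarizations * baud_gbd

# Illustrative formats: QPSK has 4 constellation points, 16QAM has 16.
# The 60 Gbaud symbol rate is an assumed example value.
for name, points in [("DP-QPSK", 4), ("DP-16QAM", 16)]:
    rate = raw_line_rate_gbps(points, 60.0)
    print(f"{name}: {rate:.0f} Gb/s raw at 60 Gbaud")
```

Under these assumptions, moving from QPSK to 16QAM doubles the bits per symbol, which is one way a single carrier can scale from 100G-class to 400G-class rates without adding fibers.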

Fundamentals of Coherent Optics Communication

Before we look at the main properties of coherent optical communication, let’s briefly review how this data transmission technique developed. Fiber-optic systems came to market in the mid-1970s, and enormous progress has been made since then. The technologies that followed sought to solve some of the major communication problems of the time, such as dispersion and high optical fiber losses.

Although coherent optical communication using heterodyne detection was proposed in 1970, it did not become popular because the intensity-modulation and direct-detection (IMDD) scheme dominated optical fiber communication systems. Fast-forward to the early 2000s, when fifth-generation optical systems entered the market with one major focus: making WDM systems spectrally efficient. Further advances followed from 2005 onward, bringing digital-coherent technology and space-division multiplexing to light.

Now that you know a bit about the development of coherent optical technology, here are some of the critical attributes of this data transmission technology.

- High-gain soft-decision FEC (forward error correction): This enables signals to traverse longer distances without the need for several regenerator points along the way. The result is more margin, less equipment, simpler photonic lines, and reduced costs.

- Strong dispersion mitigation: Coherent processors account for dispersion effects after the signals have been transmitted across the fiber. The advanced digital signal processors also help avoid the headaches of planning dispersion maps and budgeting for polarization mode dispersion (PMD).

- Programmability: This means the technology can be adjusted to suit a wide range of networks and applications. It also implies that one card can support different baud rates or multiple modulation formats, allowing operators to choose from various line rates.
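To see why the dispersion mitigation described above matters at these symbol rates, the following back-of-the-envelope sketch estimates how far chromatic dispersion spreads a pulse using the common approximation spread = D × L × Δλ. All input values are assumptions for illustration: a dispersion coefficient of 17 ps/(nm·km), typical of standard single-mode fiber near 1550 nm, an 80 km span, and a signal roughly 60 GHz wide. The function name is hypothetical.

```python
C_M_PER_S = 299_792_458.0  # speed of light in vacuum

def dispersion_spread_ps(d_ps_nm_km: float, length_km: float,
                         signal_bw_ghz: float,
                         wavelength_nm: float = 1550.0) -> float:
    """Approximate pulse spreading in ps: D * L * delta_lambda."""
    wavelength_m = wavelength_nm * 1e-9
    # Convert spectral width from Hz to nm:
    # delta_lambda = lambda^2 * delta_f / c
    delta_lambda_m = wavelength_m ** 2 * (signal_bw_ghz * 1e9) / C_M_PER_S
    delta_lambda_nm = delta_lambda_m * 1e9
    return d_ps_nm_km * length_km * delta_lambda_nm

# Assumed example values, not taken from the article.
spread = dispersion_spread_ps(17.0, 80.0, 60.0)
symbol_period_ps = 1e3 / 60.0  # 60 Gbaud -> roughly 16.7 ps per symbol
print(f"spread: {spread:.0f} ps "
      f"(about {spread / symbol_period_ps:.0f} symbol periods)")
```

With these inputs the spread comes out to several hundred picoseconds, or tens of symbol periods, so neighboring symbols overlap heavily. Because a coherent receiver recovers both amplitude and phase, the DSP can reverse this largely linear distortion digitally instead of relying on a carefully planned dispersion map.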

The Rise of High-Performance 400G Coherent Pluggables

With 400G applications, two streams of pluggable coherent optics are emerging. The first is a CFP2-based solution with a reach of more than 1,000 km, while the second is a QSFP-DD ZR solution for Ethernet and DCI applications. Both streams come with measurement and test challenges in meeting rigorous technical specifications and guaranteeing painless integration and deployment in an open network ecosystem.

Testing these 400G coherent optical transceivers and their sub-components requires test equipment capable of producing clean signals and analyzing them. The test equipment’s measurement bandwidth should also exceed 40 GHz. For dual-polarization in-phase and quadrature (IQ) signals, the stimulus and analysis sides must support varying pulse shapes and modulation schemes on four synchronized channels. This is achieved with instruments based on high-speed digital-to-analog converters (DACs) and analog-to-digital converters (ADCs). Increasing test efficiency requires modern tools that provide a comprehensive set of procedures, including interfaces that can work with automated algorithms.
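The four synchronized channels mentioned above correspond to the in-phase and quadrature components of each polarization. The sketch below shows one way a test pattern could be split into four level streams for a dual-polarization 16QAM stimulus. The channel labels (XI, XQ, YI, YQ), the Gray-coded level table, and the function name are assumptions for illustration. Pulse shaping and loading the waveforms into a DAC-based generator are omitted.

```python
import random

# Gray-coded 4-level mapping: 2 bits -> one amplitude level per channel.
LEVELS = {(0, 0): -3, (0, 1): -1, (1, 1): 1, (1, 0): 3}

def map_dp16qam(bits):
    """Split bits into four synchronized level streams: XI, XQ, YI, YQ.

    Each dual-polarization 16QAM symbol carries 8 bits, 2 per channel.
    """
    if len(bits) % 8:
        raise ValueError("bit count must be a multiple of 8")
    channels = {"XI": [], "XQ": [], "YI": [], "YQ": []}
    for i in range(0, len(bits), 8):
        symbol_bits = bits[i:i + 8]
        # Consecutive bit pairs go to XI, XQ, YI, YQ in that order.
        for k, name in enumerate(channels):
            pair = (symbol_bits[2 * k], symbol_bits[2 * k + 1])
            channels[name].append(LEVELS[pair])
    return channels

rng = random.Random(0)  # fixed seed so the stimulus is repeatable
bits = [rng.randint(0, 1) for _ in range(8 * 4)]  # four symbols
streams = map_dp16qam(bits)
for name, levels in streams.items():
    print(name, levels)
```

All four lists have the same length and advance one entry per symbol, which is the sense in which the channels must stay synchronized. Changing the modulation scheme amounts to swapping the level table and the number of bits per channel.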

Coherent Optics Interfaces and 400G Architectures

Supporting transport optics in form factors similar to client optics is crucial for network operators because it allows for simpler, more cost-effective architectures. The recent industry trend toward open line systems also means these transport optics can be plugged directly into the router without requiring an external transmission system.

Some network operators are also adopting 400G architectures, and with standardized, interoperable coherent interfaces, more deployments and use cases are coming to light. Beyond DCI, several application standards, such as Open ROADM and OpenZR+, now offer network operators increased performance and functionality without sacrificing interoperability between modules.

Article Source: Coherent Optics and 400G Applications
